Function Calling: How AI Went from Chatbot to Do-It-All Intern

AI has come a long way from being just a chatbot that answers questions. Today, thanks to function calling, large language models (LLMs) have evolved into capable digital interns that don’t just respond with words but also take action.

Imagine having an AI that doesn’t just suggest an appointment time but actually books it, or one that doesn’t just provide stock prices but executes trades based on predefined criteria. Function calling is the bridge between passive information processing and real-world execution, making AI not just a knowledge resource, but a true assistant that gets things done.

How Function Calling Works (Layman’s version)

Imagine you have a really smart friend (the LLM, or large language model) who knows a lot but can’t actually do things on their own. Now, what if they could call for help when they needed it? That’s where tool calling (or function calling) comes in!

Here’s how it works:

You ask a question or request something – Let’s say you ask, “What’s the weather like today?” The LLM understands your question but doesn’t actually know the live weather.

The LLM calls a tool – Instead of guessing, the LLM sends a request to a special function (or tool) that can fetch the weather from the internet. Think of it like your smart friend asking a weather expert.

The tool responds with real data – The weather tool looks up the latest forecast and sends back something like, “It’s 75°F and sunny.”

The LLM gives you the answer – Now, the LLM takes that information, maybe rewords it nicely, and tells you, “It’s a beautiful 75°F and sunny today! Perfect for a walk.”

How Function Calling Works (Software Developer’s version)

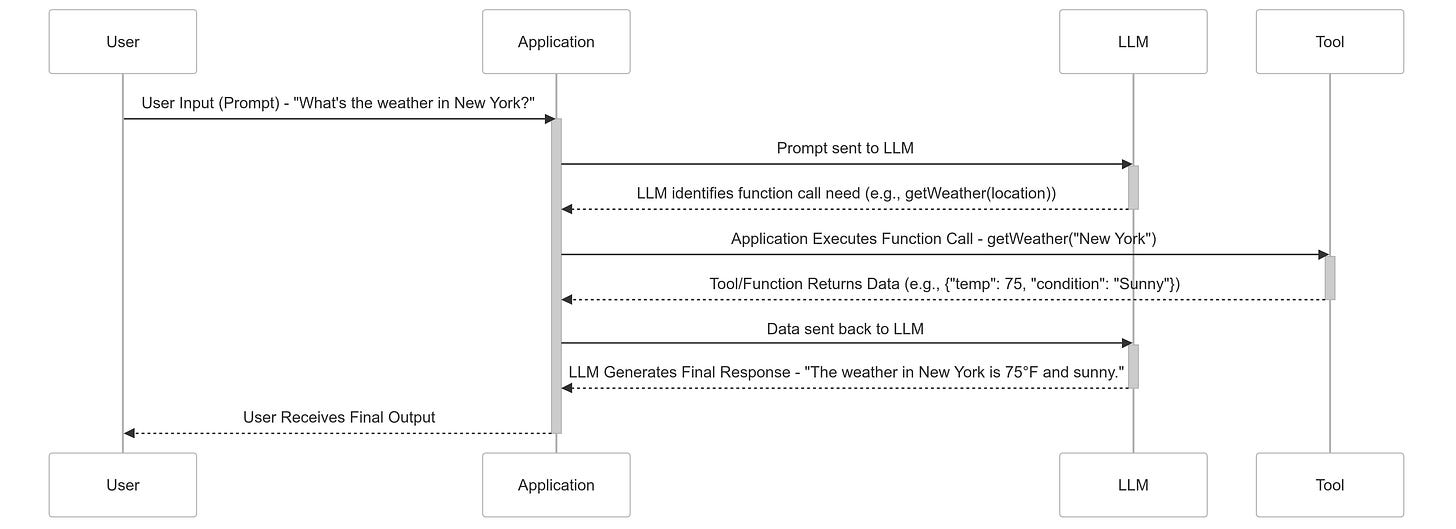

Here’s how the interaction flows between the user, the application, the LLM, and external tools:

User Input (Prompt) → Application → Sent to the LLM

The user provides an instruction, e.g., “What’s the current weather in New York?” The application forwards the user’s prompt to the LLM. The LLM processes it and determines that answering requires live weather data, which it doesn’t have.
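In code, “forwarding the prompt” usually means packaging the user’s message together with the definitions of the available tools into a single request. The sketch below assumes a generic chat-style payload: the field names (`messages`, `role`, `tools`, `parameters`) are modeled loosely on common LLM APIs but are illustrative, not any specific provider’s exact schema, and `build_request` is a hypothetical helper.

```python
import json

# Hypothetical tool definition: a name, a description the model reads,
# and a JSON Schema describing the arguments it is allowed to pass.
GET_WEATHER_TOOL = {
    "name": "getWeather",
    "description": "Get the current weather for a city.",
    "parameters": {
        "type": "object",
        "properties": {
            "location": {"type": "string", "description": "City name, e.g. New York"}
        },
        "required": ["location"],
    },
}


def build_request(user_prompt: str, tools: list[dict]) -> dict:
    """Package the user's prompt and the available tools into one request body."""
    return {
        "messages": [{"role": "user", "content": user_prompt}],
        "tools": tools,
    }


if __name__ == "__main__":
    request = build_request("What's the current weather in New York?", [GET_WEATHER_TOOL])
    # This JSON body is what the application would send to the LLM endpoint.
    print(json.dumps(request, indent=2))
```

The tool’s description and parameter schema are all the model knows about `getWeather`, so clear names and descriptions tend to matter as much as the code behind them.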

LLM Identifies the Need for a Function Call

The model recognizes that this query maps to an external tool (e.g., getWeather(location)). It can do so because the application includes the definitions of all available tools (each tool’s name, description, and expected parameters) in the request it sends to the LLM. Instead of returning a text-based guess, the LLM generates a structured function call, like:

{ "function": "getWeather", "arguments": { "location": "New York" } }

This structured output is returned to the client (e.g., an application using the LLM API).
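Because the function call is generated text, it is worth parsing and sanity-checking before anything runs: the model could emit malformed JSON, name a tool that doesn’t exist, or omit an argument. A minimal sketch, assuming the `{"function": ..., "arguments": ...}` shape from the example above; `REQUIRED_ARGS` and `parse_function_call` are hypothetical names.

```python
import json

# Hypothetical table of tools the application will accept, mapped to the
# argument names each one requires. The names mirror the example above.
REQUIRED_ARGS: dict[str, set[str]] = {"getWeather": {"location"}}


def parse_function_call(raw: str) -> tuple[str, dict]:
    """Parse the model's structured output and check it before running anything.

    Raises ValueError if the text is not a JSON object, names an unknown
    tool, or omits a required argument.
    """
    try:
        call = json.loads(raw)
    except json.JSONDecodeError as exc:
        raise ValueError(f"model output is not valid JSON: {exc}") from exc
    if not isinstance(call, dict):
        raise ValueError("expected a JSON object describing a function call")

    name = call.get("function")
    args = call.get("arguments", {})
    if not isinstance(name, str) or name not in REQUIRED_ARGS:
        raise ValueError(f"unknown tool: {name!r}")
    if not isinstance(args, dict):
        raise ValueError("'arguments' must be a JSON object")
    missing = REQUIRED_ARGS[name] - set(args)
    if missing:
        raise ValueError(f"missing arguments for {name}: {sorted(missing)}")
    return name, args


name, args = parse_function_call(
    '{ "function": "getWeather", "arguments": { "location": "New York" } }'
)
# name == "getWeather", args == {"location": "New York"}
```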

Application Executes the Function Call

The client (your application) parses this response and routes the function call to the appropriate tool.

In this case, it might send a request to a weather API like OpenWeather or a custom backend service.
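Routing often comes down to a lookup table from tool names to the functions that implement them. In the sketch below, `get_weather` is a hypothetical stand-in that returns canned data instead of calling OpenWeather or any other real service, so it runs offline; `TOOL_REGISTRY` and `execute_function_call` are likewise illustrative names.

```python
from typing import Any, Callable


def get_weather(location: str) -> dict:
    """Hypothetical stand-in for a real weather API call (e.g. an HTTP request)."""
    # Canned data so the sketch runs offline; a real version would query a service.
    return {"temperature": "75°F", "condition": "Sunny"}


# Map the tool names the model knows about to the Python functions that implement them.
TOOL_REGISTRY: dict[str, Callable[..., Any]] = {
    "getWeather": get_weather,
}


def execute_function_call(call: dict) -> Any:
    """Route a parsed function call to the matching implementation."""
    func = TOOL_REGISTRY.get(call["function"])
    if func is None:
        raise KeyError(f"no tool registered under {call['function']!r}")
    # Pass the model-supplied arguments through as keyword arguments.
    return func(**call["arguments"])


result = execute_function_call(
    {"function": "getWeather", "arguments": {"location": "New York"}}
)
# result == {"temperature": "75°F", "condition": "Sunny"}
```

Only functions placed in the registry can ever run, which is a simple way to keep the model from invoking anything the application did not explicitly expose.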

Tool/Function Returns Data

The external tool (Weather API in this case) processes the request and returns structured data, e.g.:

{ "temperature": "75°F", "condition": "Sunny" }
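One design choice at this step is what to do when the tool fails. A common approach is to report the failure as data rather than crash, so the LLM can explain the problem to the user. The sketch below assumes that approach; `get_weather` (with canned data for a single city) and `run_tool_safely` are hypothetical.

```python
import json


def get_weather(location: str) -> dict:
    """Hypothetical weather lookup with canned data for one city."""
    forecasts = {"New York": {"temperature": "75°F", "condition": "Sunny"}}
    if location not in forecasts:
        raise LookupError(f"no forecast available for {location}")
    return forecasts[location]


def run_tool_safely(location: str) -> str:
    """Call the tool and always return a JSON string for the model.

    Errors are reported as data ({"error": ...}) instead of being raised,
    so the LLM can tell the user what went wrong.
    """
    try:
        result = get_weather(location)
    except LookupError as exc:
        result = {"error": str(exc)}
    # ensure_ascii=False keeps characters like "°" readable in the payload.
    return json.dumps(result, ensure_ascii=False)


ok = run_tool_safely("New York")
failed = run_tool_safely("Atlantis")
# failed == '{"error": "no forecast available for Atlantis"}'
```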

Application Sends Data Back to the LLM

The application sends the API response back to the LLM, together with the earlier conversation, so the model can turn the raw data into a user-friendly answer.
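In typical chat-style APIs the model keeps no memory between requests, so “sending the data back” means resending the conversation with two extra entries: the call the model asked for and the tool’s result. The role and field names below are illustrative assumptions, and `add_tool_result` is a hypothetical helper. Real APIs typically also attach an ID linking each result to its call; that detail is omitted here.

```python
import json


def add_tool_result(history: list[dict], call: dict, result: dict) -> list[dict]:
    """Return a new history with the model's function call and the tool's result appended.

    The model only "remembers" the call it made and the data that came back
    if both are in the history the application resends.
    """
    return history + [
        # 1) What the model asked for.
        {"role": "assistant", "function_call": call},
        # 2) What the tool returned, serialized as text for the model to read.
        {
            "role": "tool",
            "name": call["function"],
            "content": json.dumps(result, ensure_ascii=False),
        },
    ]


history = [{"role": "user", "content": "What's the current weather in New York?"}]
call = {"function": "getWeather", "arguments": {"location": "New York"}}
history = add_tool_result(history, call, {"temperature": "75°F", "condition": "Sunny"})
# The next request to the LLM would include all three messages.
```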

LLM Generates the Final Response

The model takes the structured data and produces a natural language response, like:

“The weather in New York is 75°F and sunny. Great day to be outside!”

User Receives the Final Output

The user gets a well-formatted response, as if the LLM knew the weather all along!
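Putting the steps together, the application runs a loop: send the conversation to the model, execute any function call it returns, append the result, and repeat until the model answers in plain text. The sketch below is a minimal, offline version of that loop under stated assumptions: `fake_llm` is a hypothetical stand-in for a real model client, `get_weather` returns canned data, and the message shapes are illustrative rather than any provider’s actual format.

```python
import json
from typing import Callable


def get_weather(location: str) -> dict:
    """Hypothetical tool: returns canned data instead of calling a real weather API."""
    return {"temperature": "75°F", "condition": "Sunny"}


TOOLS: dict[str, Callable[..., dict]] = {"getWeather": get_weather}


def fake_llm(messages: list[dict]) -> dict:
    """Stand-in for a real model so the loop runs offline.

    On the user's question it requests getWeather; once a tool result is the
    latest message, it answers in plain text using that data.
    """
    last = messages[-1]
    if last["role"] == "user":
        return {"function_call": {"function": "getWeather", "arguments": {"location": "New York"}}}
    data = json.loads(last["content"])
    return {"content": f"The weather in New York is {data['temperature']} and {data['condition'].lower()}."}


def run_conversation(prompt: str, llm: Callable[[list[dict]], dict], max_steps: int = 5) -> str:
    """Repeat the call-model / run-tool cycle until the model replies with text."""
    messages = [{"role": "user", "content": prompt}]
    for _ in range(max_steps):
        reply = llm(messages)
        call = reply.get("function_call")
        if call is None:
            return reply["content"]  # No tool requested: this is the final answer.
        # The application, not the model, actually executes the tool.
        result = TOOLS[call["function"]](**call["arguments"])
        messages.append({"role": "assistant", "function_call": call})
        messages.append({"role": "tool", "name": call["function"], "content": json.dumps(result)})
    raise RuntimeError("model did not produce a final answer within max_steps")


answer = run_conversation("What's the current weather in New York?", fake_llm)
# answer == "The weather in New York is 75°F and sunny."
```

The `max_steps` cap is a deliberate choice: since the model decides whether to request another tool, a bound keeps a confused model from looping forever. Swapping `fake_llm` for a real client would mean translating to and from that provider’s request and response format.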

If you’re a software developer and you’re interested in diving deeper, I’ve written a full tutorial on this here.

Function calling is fundamentally shifting the paradigm: it lets AI evolve from a passive responder into a proactive problem-solver, and it is a key building block for autonomous agents capable of navigating and operating within complex environments.

Want to stay abreast of the latest AI trends in software engineering and learn more about entrepreneurship? Follow me on LinkedIn!